3 Reasons Why AI Promises May Fall Short

Tyler J. R.

Published October 21, 2025

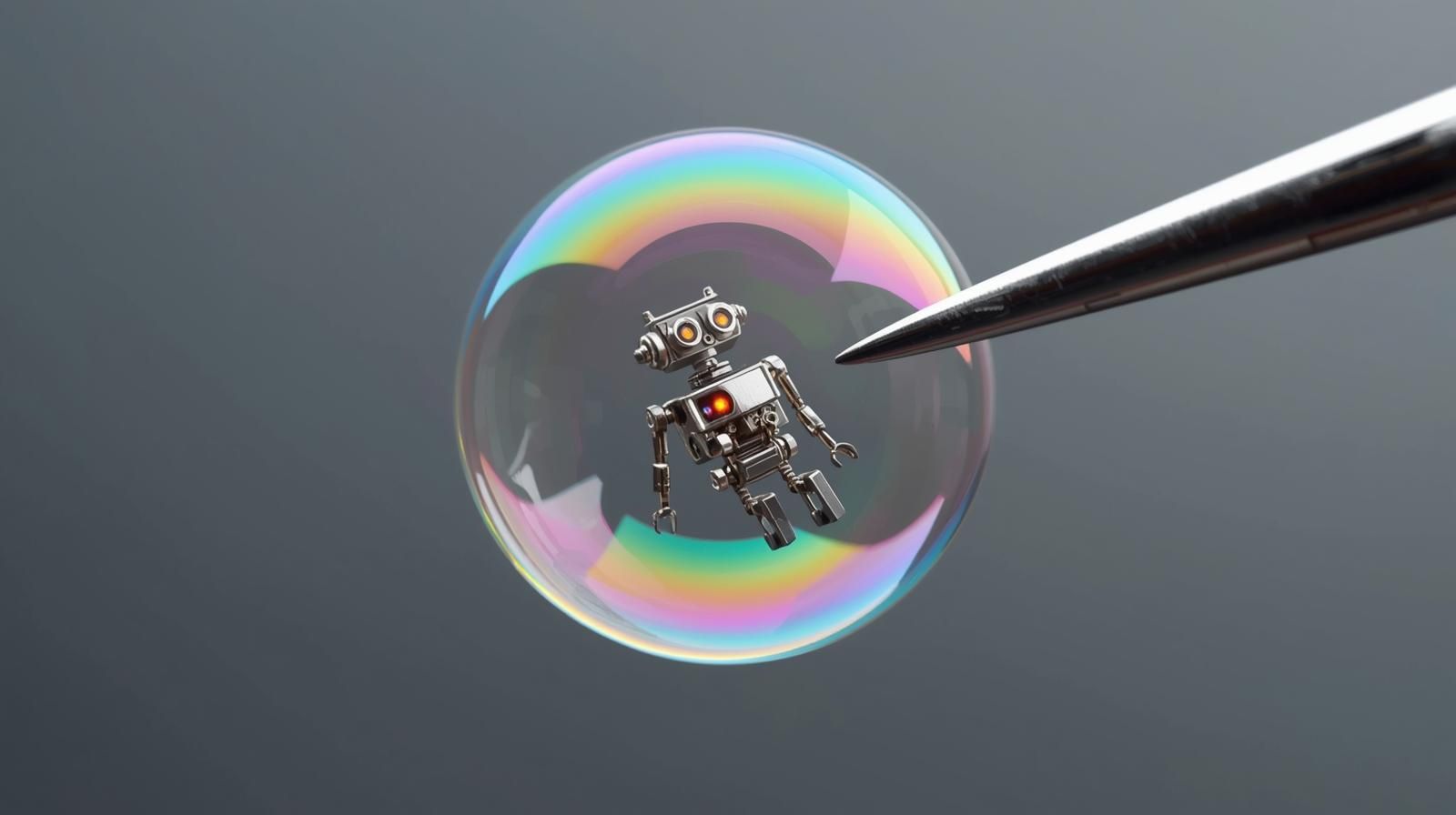

The debate between believers and skeptics of today’s AI valuations has become one of the most polarized in modern finance. Some argue that artificial intelligence represents a once-in-a-century innovation wave, comparable to the advent of electricity or the internet (or my personal favorite, the Industrial Revolution).

They contend that markets are simply pricing in the unprecedented scale of transformation that AI will unleash across industries, from healthcare and logistics to entertainment and education. To them, the trillion-dollar valuations of firms like NVIDIA and Microsoft are not speculative but anticipatory, a rational reflection of future cash flows derived from AI’s inevitable ubiquity.

On the other side are those who see the current euphoria as a self-reinforcing narrative detached from financial fundamentals. They point out that most AI applications remain experimental, unprofitable, or heavily subsidized by cloud credits and venture capital. The revenue concentration in a handful of infrastructure providers, coupled with the lack of clear monetization paths for downstream startups, mirrors the imbalance that defined past bubbles, when investors priced “promise” as if it were profit.

The AI Promises

The key to determining whether the believers or the skeptics are correct lies in the word “promise.” In a recent interview, financial commentator Mohamed El-Erian stated that AI is in a “rational” bubble, one whose rationale rests on the promises of AI. One thing is certain: Wall Street and tech leaders have no shortage of predictions about what generative AI will deliver financially. Some of these promises include:

“AI will make more millionaires in 5 years than the Internet did in 20.”

~Jensen Huang (CEO of NVIDIA Corporation)

“AI software and services alone could generate up to $23 trillion in annual economic value by 2040.”

~McKinsey & Company

“Generative AI could deliver total value in the range of $2.6 trillion to $4.4 trillion annually when applied across industries.”

~McKinsey & Company

“AI-related infrastructure spending … expected to hit more than $2.8 trillion through 2029.”

~Citigroup Inc.

“The global AI market currently nearing $400 billion and could generate $15 trillion by 2030.”

~Global market analysis (various reports)

“AI agents are a multi-trillion-dollar opportunity.”

~Jensen Huang (CEO of NVIDIA Corporation)

“The world’s first trillionaires are going to come from somebody who masters AI and all its derivatives and applies it in ways we never thought of.”

~Mark Cuban

The promises of unfathomable wealth are endless, but one must question how valid these projections are when it comes to the value generative AI will actually return to the economy. Are we in a “rational” bubble, as El-Erian suggests, or are we setting ourselves up for substantial disappointment?

AI Is Not a “Rational” Bubble

While AI’s prospects are extraordinary and it will no doubt change industries as we know them, the financial promises are far-fetched, for three reasons:

1. The Eye-Watering Cost to Support Generative AI

Despite the narrative of infinite digital scale, generative AI is among the most capital-intensive technologies ever deployed. Every interaction—whether a ChatGPT query, an image generation, or a code completion—runs on vast clusters of GPUs that consume staggering amounts of electricity, bandwidth, and cooling. NVIDIA’s high-end chips, such as the H100 and B200, sell for $25,000–$40,000 each, and a single large-language-model training run can require tens of thousands of these GPUs operating for weeks, pushing costs for model training into the tens or hundreds of millions of dollars.

The expense doesn’t stop there: for many providers, inference (serving users’ requests) now costs more than training itself, with estimates suggesting each ChatGPT conversation can cost OpenAI several cents in compute, an unsustainable expense at internet scale. Data centers must also be upgraded with ultra-dense fiber networks, power-hungry cooling systems, and massive new grid capacity; Microsoft’s AI expansions alone are expected to require billions of dollars in new energy and water infrastructure.

The scale of spending has become so immense that OpenAI CEO Sam Altman has called for “trillions of dollars” to build global AI infrastructure, including chip fabrication plants and power generation dedicated to AI workloads. Cloud providers are racing to secure supply, locking in multi-year commitments with NVIDIA and AMD that strain their balance sheets.

The hidden irony is that AI, marketed as an efficiency revolution, is currently one of the least efficient technologies ever created, devouring physical resources to produce digital outputs. These escalating costs leave the AI ecosystem heavily dependent on subsidies from deep-pocketed firms like Microsoft, Google, and Amazon and from venture capital funds, raising the question: if margins depend on perpetual capital infusion, how rational are the valuations built on this structure?

2. “Hallucinations” Make AI Create More Work Than It Takes Away

One of the paradoxes of generative AI is that its hallucinations often create more work rather than less. Large language models are trained to produce the most statistically likely continuation of text, not verified truth. As a result, they frequently generate confident but incorrect information—fabricated citations, imaginary legal precedents, misleading summaries, or subtly wrong code.

In professional settings, this means that AI-assisted output cannot be trusted at face value; every result requires verification, editing, and often complete rework. Lawyers who have used AI to draft motions have been sanctioned for submitting fictitious case law; journalists have had to fact-check AI-generated copy line by line; software engineers often spend more time debugging AI-written code than they would writing it themselves. In short, the productivity illusion collapses under the weight of human oversight. Instead of automating expertise, generative AI often outsources error, transferring cognitive load from creation to correction.

This phenomenon can make workflows longer, not shorter, especially in domains where precision and accountability are non-negotiable. Far from eliminating human labor, hallucinations create a hidden layer of supervision work that erodes the very efficiency gains the technology promises.

Knowing how to reduce hallucinations effectively is key to making generative AI productive. This can be done in a variety of ways, such as grounding the model in verified source documents, restricting it to narrow and well-defined tasks, and keeping a human reviewer in the loop. Simply throwing AI at generic tasks, however, will yield a large number of hallucinations, which will more than likely create more work than not using AI at all.

3. Generative AI Has Yet to Produce Results

While the rhetoric around artificial intelligence promises exponential returns, the financial results have yet to materialize for most adopters. A widely cited 2025 report from MIT found that roughly 95% of organizations investing in generative AI have not seen any measurable financial return on those investments so far.

Despite unprecedented spending on AI tools, infrastructure, and personnel, the vast majority of firms remain stuck in pilot phases—struggling to integrate models into existing workflows, ensure data quality, or align AI outputs with business outcomes. The study noted that only a small fraction of organizations had reached the scale or maturity necessary to realize productivity gains, and even those gains were marginal compared to expectations. This disconnect between massive capital expenditure and limited realized value underscores how early the commercial phase of AI truly is.

In financial terms, most AI initiatives remain cost centers rather than profit centers, driven more by fear of missing out than by proven returns. For investors who believe AI-driven productivity will soon flood balance sheets with new revenue, the empirical evidence so far suggests a very different story.

Note that this does not imply AI has no place in the business world (quite the contrary). However, AI is simply a tool, much like a calculator is to a mathematician. A mathematician may know calculus, but without understanding how to use the calculator in everyday work, the calculator becomes more of a burden than a blessing.

The AI Bubble Promises Are Not “Rational”

In conclusion, while the enormous spending of the AI boom may be rational if one assumes AI can create the economic environment industry leaders describe, the data so far suggest that those leaders’ promises are not. This lack of rationality would matter far less if a large part of the US economy did not depend on these promises coming true in the near future. It is critical that companies take the time to fully understand how AI, and large language models in particular, actually work. Without that care, AI can become a severe and costly mistake.